<rant>

The resurgence of AI (well, more specifically, of machine and deep learning) in the last decade has brought about some significant innovations, whether in beating humans at StarCraft, creating potential for universal translators, or even detecting cancer.

However, it has also caused significant cultural and social harm. There are the obvious problems caused by the privacy-invasive and fundamentally creepy analysis that machine learning enables, tracking activity and manipulating behavior en masse based on data points generated from online activity, but that’s not what this post is about.

A more pertinent vein of concern is the simple fact that these black box algorithms shape our lives and our culture, ultimately converging on a monoculture in the chase for optimality. Even in the world of natural language, AI (or, rather, those who weaponize it for profit) has overstepped its bounds.

On AI-Driven Monoculture

I’ve alluded before to the dangers of letting algorithms curate our lives. As the fantastic documentary The Social Dilemma argues, companies have goals in mind, and their algorithms do whatever is necessary to achieve those goals. That’s simply how machine learning works:

Algorithms are optimised to some definition of success. So if you can imagine, if a commercial enterprise builds an algorithm, to their definition of success, it’s a commercial interest. It’s usually profit.

— Cathy O’Neil, The Social Dilemma

We see the effect of these “success”-oriented, black-box algorithms in a number of cultural domains.

Music

Spotify encourages a musical monoculture by incentivizing songs that game algorithms and help artists get their music onto auto-generated playlists:

Playlists have fundamentally changed the listening experience. [They] limit music discovery and the sound of music itself. Singles are tailored to beat the skip-rate that hinders a song’s chances of making it on to a popular playlist: hooks and choruses hit more quickly. Homogenous mid-tempo pop drawing from rap and EDM has become dominant. The algorithm pushes musicians to create monotonous music in vast quantities for peak chart success. Music appears in danger of becoming a kind of grey goo.

— Laura Snapes, “Has 10 years of Spotify ruined music?”

“How was radio any different?” you might ask. The big difference is the same as it is for all pre-Internet comparisons: scale. The sheer scale, breadth, proliferation, and dynamic (relative to radio) nature of playlists on Spotify (or YouTube, or Apple Music, or what-have-you) make them far more widespread and influential in pushing a musical monoculture than radio ever was. Even if you like to stay off the beaten path of pop music, it’s undeniable that many of us give up the uniqueness (and with it, the effort) of a personal music library in favor of playlists curated for the genres we enjoy, artists we follow, or even moods we experience.

Politics

Fascinatingly, rather than herding humanity (well, I’ll speak for America specifically) into ideological monoculture, we instead have increasing polarization into two extremes in the political world.

From the point of view of watch time, this polarization is extremely efficient at keeping people online.

People think the algorithm is designed to give them what they really want, only it’s not. The algorithm is actually trying to find a few rabbit holes that are very powerful, trying to find which rabbit hole is the closest to your interest.

— Guillaume Chaslot, The Social Dilemma

Ironically, a political monoculture would bring about unity (given that by definition most people would be on the same page), but unity does not breed engagement.

Just like before, we see AI manipulating us in order to achieve its goals, and the result of that manipulation is a simplification of our political perspectives, a destruction of individuality, and a transition towards mindless groupthink.

And Now: Language

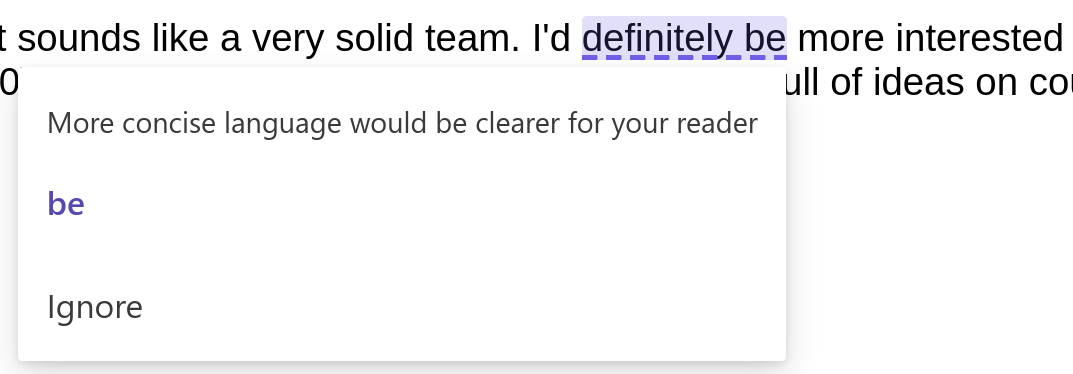

I was writing an email (as one does), and Outlook decided to try to be helpful in simplifying my language:

Clearer for my reader? First of all, you don’t even know my reader. You also obviously don’t understand context or nuance, Cortana. There’s a massive difference between “I’d be more interested” and “I’d definitely be more interested” when it comes to incentives: the latter relays a level of enthusiasm the other doesn’t.

Sure, if the goal is to homogenize the English language into something akin to a programming language, with a “standard library” of helper ~~functions~~ phrases that everyone universally uses to convey over-simplified concepts, then this feature is a good idea.

Newspeak, for readers unfamiliar with the term, is:

a controlled language of simplified grammar and restricted vocabulary designed to limit the individual’s ability to think and articulate “subversive” concepts such as personal identity, self-expression and free will

The concept comes from Orwell’s dystopian novel Nineteen Eighty-Four, in which it’s used to maintain authoritarian control over the populace by literally limiting language to prevent critical thinking (or other “radical” ideas). Huxley voiced a similar concern in his essay “Words and Behavior”: “to think correctly is the condition of behaving well,” and the easier we make it to think correctly, the more well-behaved our populace becomes.

Now, this seems like a bit of an extreme comparison to what essentially amounts to a resurgence of Microsoft Clippy, but if Bing can limit a search engine with censorship and Amazon can use doorbells to help police forces, it’s not really a stretch to presume that Microsoft (or Grammarly, or Google) could use their unique positions in the “humans writing things” niche to slowly, carefully alter common phrases or vocabulary in the English language to promote an agenda.[^1]

Dystopias aside, even if there’s no ulterior, malevolent motive, there’s still harm being done. These suggestions fundamentally alter how we regularly use language by simplifying it, which limits how well we can articulate our thoughts. Language is already a very “low bandwidth” way to express ourselves: think about how precisely and carefully you need to choose your words and phrasing to convey specific, complex thoughts. Philosophical tomes aside, there’s always room for misinterpretation by your reader no matter how many words you use. In other words, it’s already hard to express ourselves in simple terms, and these auto-generated “helpful” suggestions make that problem worse, not better.

In Conclusion

Back in the day, marketers and advertisers worked hard alongside scientists to find “exploits” in human psychology that they could use to manipulate us into buying things or behaving in certain ways. From that, we got things like color-coordinated advertising (where red & yellow evoke hunger), the center-stage effect (where people prefer things located in the middle), price anchoring principles (where overly expensive items make others look affordable), and the field of consumer psychology in general.

Now, the algorithms do it for us: we’ve built massive systems essentially designed to learn how to exploit human weaknesses. We’re actively engineering our own cultural, social, and psychological destruction in the name of ~~engagement~~ profit.

</rant>

-

[^1]: As a random aside (and possible spoiler for the Mistborn trilogy), this is somewhat part of the plot in the second Mistborn novel, The Well of Ascension: a “mysterious entity” subtly alters the words of crucial religious doctrine over centuries in order to work towards securing its own release.